Dive Brief:

- Trane Technologies is launching a comprehensive thermal management system reference design for gigawatt-scale AI data centers, the building and industrial solutions company said last week.

- Reference Design #501 is the first thermal management reference design engineered around the NVIDIA Omniverse DSX blueprint for AI factories capable of running NVIDIA’s next-generation Vera Rubin chips, Trane said.

- The reference design is “built for extreme efficiency and maximum uptime” in a power-constrained world, David Brennan, Trane vice president of innovation and product management excellence, said in an email.

Dive Insight:

Data center developers and tenants — including the big technology companies known as hyperscalers — increasingly see power availability as the biggest brake on their efforts to deploy more high-performance computing capacity.

Grid constraints already hinder data center development in key U.S. markets, such as the Pacific Northwest and the Mid-Atlantic region. The crunch has led to plummeting vacancy rates in data centers and spiking energy prices on the power grid serving northern Virginia, the country’s biggest data center hub.

Electric utilities are exploring creative solutions in response. Portland General Electric, for example, is working with a Stanford University spinoff to boost spare capacity on its grid, enabling new data centers to power up without waiting years for expensive transmission upgrades.

But data center efficiency is an important piece of the puzzle, not only for speeding “time to power” but also for boosting revenue from AI token conversion, Brennan said.

“Every watt of power we save enables our customers to generate more revenue,” Brennan said.

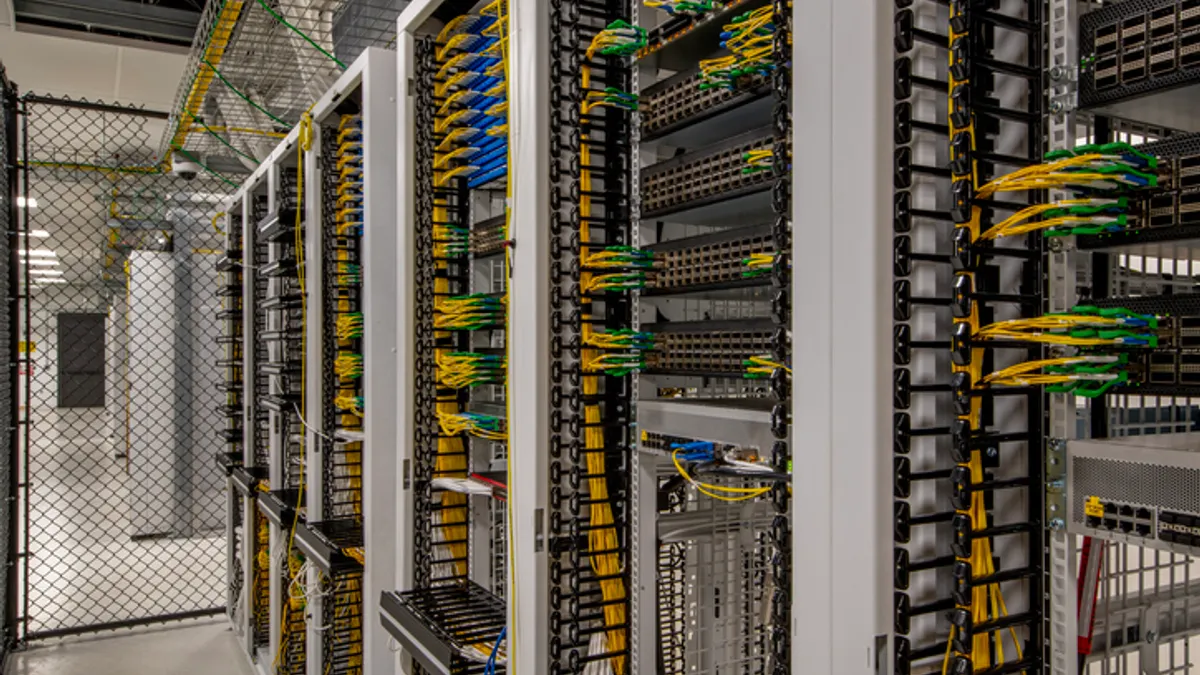

The reference design employs high-efficiency water-cooled chillers with advanced controls to minimize onsite energy consumption and free up more power for computing. So-called swing chillers can shift between high- and low-temperature loops, reducing the number of chillers needed to achieve the same results. Dedicated temperature loops help maximize “free cooling” hours, when operators can use ambient air rather than mechanical chillers to cool equipment and whitespace.

Brennan described Trane’s approach as a way to “flip the script” from traditional chip- or rack-level solutions. The solution works at the facility level, coordinating water-cooled systems with adjacent products like dry coolers to optimize power usage effectiveness, or PUE, while all but zeroing out water consumption under certain ambient conditions, he said.
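
For readers unfamiliar with the metric, PUE is total facility power divided by the power delivered to IT equipment, so a value closer to 1.0 means less overhead for cooling and other support systems. The short Python sketch below illustrates why that matters when grid power is the constraint. It is only a back-of-the-envelope illustration: the function names and every figure in it, including the 1-gigawatt site and the two PUE values, are hypothetical and do not come from Trane or NVIDIA.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power usage effectiveness: total facility power / IT equipment power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_load_kw


def it_capacity_kw(site_power_kw: float, pue_value: float) -> float:
    """IT power that fits under a fixed site power budget at a given PUE."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return site_power_kw / pue_value


# Hypothetical figures for illustration only; not from Trane or NVIDIA.
SITE_POWER_KW = 1_000_000  # assumed 1 GW grid connection

baseline_kw = it_capacity_kw(SITE_POWER_KW, 1.4)  # assumed less efficient plant
improved_kw = it_capacity_kw(SITE_POWER_KW, 1.2)  # assumed more efficient plant
freed_kw = improved_kw - baseline_kw  # extra power available for computing

print(f"IT capacity at PUE 1.4: {baseline_kw:,.0f} kW")
print(f"IT capacity at PUE 1.2: {improved_kw:,.0f} kW")
print(f"Power freed for computing: {freed_kw:,.0f} kW")
```

Under these assumed numbers, the same grid connection supports more computing load at the lower PUE, which is the arithmetic behind Brennan’s point that saved watts translate into revenue.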

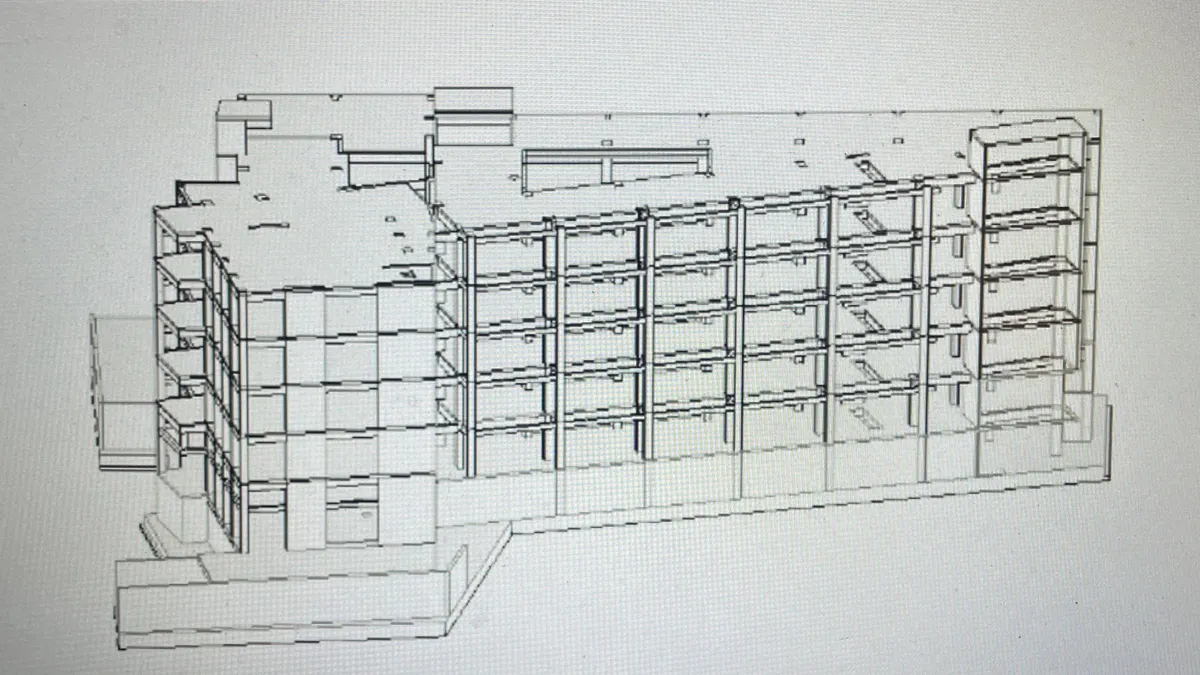

Trane’s reference design incorporates other emerging aspects of data center design, like digital twinning, Brennan said. Trane joins companies such as building solutions provider Schneider Electric and ETAP, a power systems design and operations firm, in developing digital twin capabilities for NVIDIA’s most capable data center blueprints.

Trane’s design integrates the NVIDIA Omniverse DSX blueprint for AI factory digital twins, he said. That enables system designers to aggregate three-dimensional data, simulate performance under a range of loads and conditions, and optimize the thermal system before construction begins, which helps future-proof facilities that cost billions of dollars to construct yet depreciate rapidly.

“The digital twin is the key to continuous optimization and future-proofing these complex facilities,” Brennan said.